Hey guys,

It's been a while since I released anything new here (the last was YellowBot, last year), so I hope you enjoy this.

Proxy Goblin

A Proxy Scraper with a Sick Automation mode.

Mix & Match tasks like Sending Email with Proxies, Executing Python Commands, Executing MS-DOS Batch Commands, Uploading to Remote FTP Location, Saving proxies to disk.

Just set the minimum proxy level requirements, create a few tasks, set the interval period and let the Goblin run continuously.

:food-smiley-002:

I’ve also made the software highly customizable, so you can tweak almost every setting to your liking.

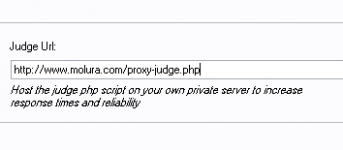

Modify everything from timeouts and judge URLs to max connections.

Easily add additional URL sources to scrape proxies from, and if you don’t like the inbuilt sources, you can also choose to scrape only your own URLs.

You can also easily blacklist IP addresses and hostnames using wildcards. Advanced users can use PCRE flavoured regex for more control.
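To give a rough idea of how wildcard and regex blacklisting behaves, here is a minimal Python sketch. It is not the Goblin's own code: the patterns, the `is_blacklisted` helper and the sample proxies are all made up for illustration, and Python's `re` module is close to but not identical to PCRE.

```python
import fnmatch
import re

# Hypothetical blacklist entries, for illustration only.
WILDCARD_BLACKLIST = ["10.*", "192.168.*", "*.gov", "*.mil"]
# Regex entry covering the private range 172.16.0.0 - 172.31.255.255.
REGEX_BLACKLIST = [re.compile(r"^172\.(1[6-9]|2\d|3[01])\.")]


def is_blacklisted(host: str) -> bool:
    """Return True if host matches any wildcard or regex blacklist entry."""
    host = host.lower()
    if any(fnmatch.fnmatchcase(host, pattern) for pattern in WILDCARD_BLACKLIST):
        return True
    return any(rx.search(host) for rx in REGEX_BLACKLIST)


proxies = [
    "10.0.0.5:8080",
    "203.0.113.7:3128",
    "proxy.example.gov:80",
    "172.16.4.1:1080",
]
# Strip the port before matching, then keep only non-blacklisted proxies.
kept = [p for p in proxies if not is_blacklisted(p.rsplit(":", 1)[0])]
print(kept)
```

Wildcards cover the common cases (whole subnets, whole TLDs), while the regex entry handles a range that a single wildcard can't express.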

And these are just some of the features.

How does it Work?

Schedule an unlimited number of tasks, and let the scraper run continuously. Sit back and let your proxy list grow.

How Can I Use it?

An example campaign could go like this, but you're limited only by your creativity.

- Gather proxies

- Scrub & filter

- Send email with proxy list

- Upload list to FTP Server

- Pause for 10 minutes and repeat
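For anyone who thinks in code, the campaign above boils down to a loop like the one below. This is only a sketch of the idea in Python, not how the Goblin is implemented; `run_cycle`, `run_forever` and the stand-in tasks are hypothetical names chosen for the example.

```python
import time
from typing import Callable, Iterable, List

Task = Callable[[List[str]], None]


def run_cycle(gather: Callable[[], Iterable[str]],
              keep: Callable[[str], bool],
              tasks: List[Task]) -> List[str]:
    """Run one pass: gather, scrub and filter, then hand the list to each task."""
    # Deduplicate while preserving order, then apply the filter.
    proxies = [p for p in dict.fromkeys(gather()) if keep(p)]
    for task in tasks:
        task(proxies)
    return proxies


def run_forever(gather, keep, tasks, pause_seconds: int = 600) -> None:
    """Repeat the cycle, pausing between passes (600 s = 10 minutes)."""
    while True:
        run_cycle(gather, keep, tasks)
        time.sleep(pause_seconds)


# Stand-in gather/filter/task functions; real tasks would email or upload.
sent = []
result = run_cycle(
    gather=lambda: ["1.2.3.4:80", "1.2.3.4:80", "5.6.7.8:3128"],
    keep=lambda p: p.endswith(":3128"),
    tasks=[sent.append],
)
print(result)
```

Each task just receives the filtered list, which is why tasks can be mixed and matched freely.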

Mix & Match Tasks In Automation Mode

Send Email

Send an email to yourself or multiple recipients with the filtered proxies.
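If you're curious what an email task amounts to, here is a minimal Python sketch using the standard library's `email.message.EmailMessage`. The addresses, subject line and the `build_proxy_email` helper are assumptions for illustration; the Goblin's actual message format may differ.

```python
from email.message import EmailMessage
from typing import List


def build_proxy_email(sender: str, recipients: List[str],
                      proxies: List[str]) -> EmailMessage:
    """Build a plain-text email with one proxy per line in the body."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = f"{len(proxies)} filtered proxies"
    msg.set_content("\n".join(proxies))
    return msg


# Placeholder addresses and proxies, purely for the example.
message = build_proxy_email(
    "goblin@example.com",
    ["me@example.com", "team@example.com"],
    ["1.2.3.4:80", "5.6.7.8:3128"],
)
# Actually sending it would go through smtplib with your own SMTP server,
# e.g. smtplib.SMTP(host).send_message(message).
```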

Save to Disk

Save filtered proxies to a CSV or plain text file. Placeholders like %date% can be used in the filenames. If the file already exists, you can choose to append to it or overwrite it.
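Here is a small Python sketch of what filename placeholders and the append/overwrite choice mean in practice. The ISO date format and the helper names are my assumptions for the example, not necessarily what the Goblin produces.

```python
import os
import tempfile
from datetime import date
from typing import List


def expand_placeholders(template: str, today: date) -> str:
    """Replace %date% with an ISO date (the date format is an assumption)."""
    return template.replace("%date%", today.isoformat())


def save_proxies(path: str, proxies: List[str], append: bool = False) -> None:
    """Write one proxy per line, appending to or overwriting an existing file."""
    mode = "a" if append else "w"
    with open(path, mode, encoding="utf-8") as fh:
        for proxy in proxies:
            fh.write(proxy + "\n")


# Example date and temp directory, chosen only for the demonstration.
name = expand_placeholders("proxies-%date%.txt", date(2024, 1, 31))
out_path = os.path.join(tempfile.mkdtemp(), name)
save_proxies(out_path, ["1.2.3.4:80"])                  # creates the file
save_proxies(out_path, ["5.6.7.8:3128"], append=True)   # adds to it
```

With a date placeholder in the filename you get one file per day, and append mode keeps adding to it on every run.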

Save to Remote FTP Location

Same as Save to Disk, but the file goes to a remote FTP location. Great when you have a cron job on your server that reads the text file, so it always has fresh proxies.
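On the server side, the cron script only needs to read the uploaded file. A minimal Python sketch, assuming the list is plain text with one `host:port` entry per line (that layout is my assumption, as is the `parse_proxy_lines` helper):

```python
from typing import List, Tuple


def parse_proxy_lines(text: str) -> List[Tuple[str, int]]:
    """Parse 'host:port' lines, skipping blanks and malformed entries."""
    proxies = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        host, sep, port = line.rpartition(":")
        # Require a host, a separator and a valid TCP port number.
        if sep and host and port.isdecimal() and 0 < int(port) < 65536:
            proxies.append((host, int(port)))
    return proxies


# Sample file contents; a real script would read the uploaded file instead.
sample = "1.2.3.4:80\n\nnot-a-proxy\n5.6.7.8:3128\n9.9.9.9:99999\n"
parsed = parse_proxy_lines(sample)
print(parsed)
```

Skipping malformed lines instead of raising keeps the cron job running even if an upload is caught half-written.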

Execute Python / MS-DOS Custom Commands

There is almost nothing you can’t do with this feature. You can write custom scripts as either MS-DOS batch files or Python. Something sweet for advanced users. :jester:

How Much? :music06:

Use the following discount code to get about 60% off the retail price of $67.

Coupon Code: WICKEDFIRE

Price After Discount:

Only $27.00

[Click Here To Download The Goblin]

* Don't forget to enter the coupon code and hit the "Checkout" button in the bottom-right corner of the page.

All the best and I hope you enjoy using the Goblin!

Cheers,

Ash

Additional Screenshots:

Manual Mode

Settings - Additional Sources

Settings - Blacklists

Task - Python Commands

Task - DOS Commands

Task - Email

Task - FTP Proxies

Task - Save Proxies To Disk - Filename Placeholders

Task - Save Proxies To Disk

Tasks Overview - After adding 4 tasks

Tasks Overview