As data is increasingly generated at the edge — from cameras, sensors, IoT devices, autonomous vehicles, and mobile phones — sending all this data back to centralized servers is no longer viable.

According to IDC (2024):

By 2027, over 55% of AI data will be processed at the edge rather than in centralized clouds.

This shift demands a new kind of network architecture that supports:

- Ultra-low latency (sub-millisecond)

- On-site data processing to offload the cloud

- Optimized bandwidth usage and on-prem security
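A quick calculation shows why a sub-millisecond target pushes processing toward the edge. Assume light in optical fiber travels at roughly $2 \times 10^{8}$ m/s, about two-thirds of its speed in vacuum, and ignore processing, queuing, and switching delays. A 1 ms round-trip budget then caps the one-way distance to the server at roughly:

$$
d_{\max} = \frac{v_{\text{fiber}} \cdot t_{\text{RTT}}}{2} \approx \frac{(2 \times 10^{8}\ \text{m/s})(1 \times 10^{-3}\ \text{s})}{2} = 1 \times 10^{5}\ \text{m} = 100\ \text{km}
$$

Real networks add routing detours and per-hop delays, so the usable radius is smaller still. A data center several hundred kilometers away cannot meet this budget on propagation delay alone.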


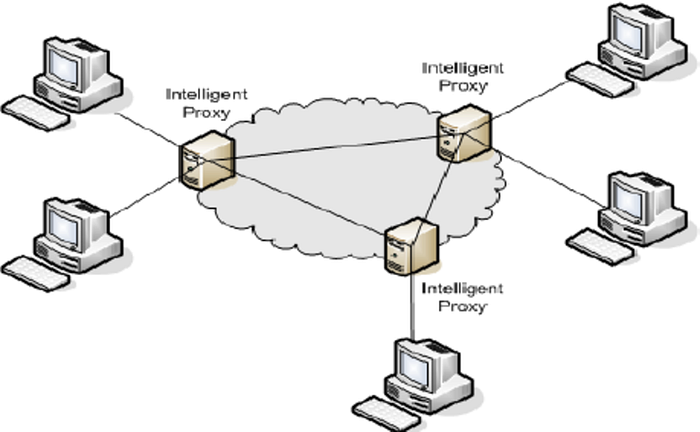

A Distributed Proxy Network places proxy nodes at edge locations rather than concentrating them in central hubs. With real-time AI integration, it enables:

- Localized data routing and behavioral filtering

- On-site compression, encryption, and initial data preprocessing

- Immediate AI inference from lightweight models deployed at the edge

- Avoidance of unnecessary data backhaul to central servers
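The capabilities above can be sketched as a single node loop: score each record locally, keep routine data on site, and compress only what is worth sending upstream. This is a minimal illustration, not ProxyAZ's implementation. `EdgeProxyNode`, the `anomaly` field, and the 0.8 threshold are hypothetical, and `score()` is a stand-in for a real lightweight model. Encryption is left out because Python's standard library has no symmetric cipher. A real deployment would rely on TLS or a vetted crypto library.

```python
import json
import zlib
from dataclasses import dataclass, field


@dataclass
class EdgeProxyNode:
    """Hypothetical edge proxy node: scores records locally, keeps routine
    data on site, and compresses only the records worth backhauling."""
    threshold: float = 0.8
    local_store: list = field(default_factory=list)
    uplink_queue: list = field(default_factory=list)

    def score(self, record: dict) -> float:
        # Stand-in for a lightweight edge model (assumption): here we simply
        # read a normalized "anomaly" value supplied by the device.
        return float(record.get("anomaly", 0.0))

    def handle(self, record: dict) -> str:
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        if self.score(record) >= self.threshold:
            # Compress before backhaul to reduce uplink bandwidth.
            self.uplink_queue.append(zlib.compress(payload))
            return "forwarded"
        # Routine data stays at the edge and is never sent upstream.
        self.local_store.append(record)
        return "kept_local"


node = EdgeProxyNode(threshold=0.8)
print(node.handle({"sensor": "cam-1", "anomaly": 0.95}))  # forwarded
print(node.handle({"sensor": "cam-2", "anomaly": 0.10}))  # kept_local
```

Keeping the decision in one `handle()` call makes it easy to swap the stub `score()` for an actual on-device model without touching the routing logic.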


- Each autonomous vehicle produces more than 40 TB of data daily (cameras, LiDAR, radar)

- Previously, most data was transmitted to centralized servers for processing
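To put the 40 TB figure in perspective, assume decimal terabytes ($10^{12}$ bytes) and an upload spread evenly over 24 hours. A single vehicle would then need a sustained uplink of roughly:

$$
\frac{40 \times 10^{12}\ \text{bytes} \times 8\ \text{bits/byte}}{86\,400\ \text{s}} \approx 3.7 \times 10^{9}\ \text{bit/s} \approx 3.7\ \text{Gbit/s}
$$

That figure is per vehicle and before protocol overhead, so it multiplies with fleet size.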

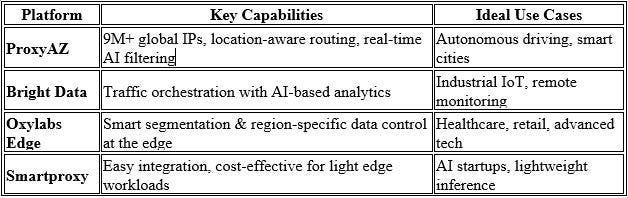

- Deployed ProxyAZ nodes across maintenance hubs

- Integrated edge-level AI to filter and classify data in real time

- Only critical or training-related data is sent back to the cloud
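The filter-and-classify step might look like the triage function below. It is a sketch under stated assumptions. The `Frame` fields, the `safety_event` flag, and the 0.6 confidence cutoff are all hypothetical. The rule of sending low-confidence frames for retraining is one common heuristic and is not necessarily the one used in this deployment.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    CLOUD_CRITICAL = "cloud_critical"  # safety-relevant: send immediately
    CLOUD_TRAINING = "cloud_training"  # useful for retraining: send in batches
    DROP = "drop"                      # routine: not backhauled


@dataclass
class Frame:
    sensor: str          # e.g. "camera", "lidar", "radar"
    size_bytes: int
    confidence: float    # edge model's confidence in its own prediction
    safety_event: bool   # hypothetical flag, e.g. hard braking from telemetry


def triage(frame: Frame, min_confidence: float = 0.6) -> Route:
    if frame.safety_event:
        return Route.CLOUD_CRITICAL
    if frame.confidence < min_confidence:
        # Frames the edge model is unsure about are candidates for labeling.
        return Route.CLOUD_TRAINING
    return Route.DROP


def backhaul_ratio(frames: list[Frame]) -> float:
    """Fraction of bytes that would still be sent to the cloud."""
    total = sum(f.size_bytes for f in frames)
    sent = sum(f.size_bytes for f in frames if triage(f) is not Route.DROP)
    return sent / total if total else 0.0


frames = [
    Frame("camera", 100, 0.90, False),
    Frame("lidar", 300, 0.40, False),
    Frame("radar", 100, 0.95, True),
    Frame("camera", 500, 0.99, False),
]
print(backhaul_ratio(frames))  # 0.4
```

With these four frames, 400 of 1,000 bytes (40%) would be backhauled. The actual ratio depends entirely on the thresholds and on how often safety events occur.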

- Medical IoT devices generate real-time patient vitals 24/7

- Instant response required (e.g., for abnormal heart rate alerts)

- Smart proxies deployed inside the hospital

- AI models trained on local datasets trigger immediate alerts

- Only mission-critical events are sent to central systems
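The local-alert pattern can be illustrated with a simple rule. One caveat: the source describes AI models trained on local datasets, and this sketch swaps in a plain threshold check so the control flow stays visible. The 40/130 bpm bounds and the 3-reading window are illustrative only and are not clinical guidance. `HeartRateMonitor` and `process_stream` are hypothetical names. The monitor fires only when an abnormal episode *starts*, so a sustained episode produces one forwarded event rather than a flood.

```python
from collections import deque


class HeartRateMonitor:
    """Hypothetical on-site rule: alert when heart rate stays outside
    [low, high] bpm for `window` consecutive readings."""

    def __init__(self, low: int = 40, high: int = 130, window: int = 3):
        self.low = low
        self.high = high
        self.recent = deque(maxlen=window)
        self.alerting = False

    def update(self, bpm: int) -> bool:
        """Return True only when a new abnormal episode begins."""
        self.recent.append(bpm)
        abnormal = len(self.recent) == self.recent.maxlen and all(
            b < self.low or b > self.high for b in self.recent
        )
        fire = abnormal and not self.alerting
        self.alerting = abnormal
        return fire


def process_stream(patient_id: str, readings: list[int],
                   monitor: HeartRateMonitor) -> list[dict]:
    """Raise alerts locally; return only the events to forward centrally."""
    events = []
    for i, bpm in enumerate(readings):
        if monitor.update(bpm):
            events.append({"patient": patient_id, "index": i,
                           "bpm": bpm, "type": "abnormal_hr"})
    return events


print(process_stream("bed-12", [72, 75, 140, 145, 150, 148, 80], HeartRateMonitor()))
# [{'patient': 'bed-12', 'index': 4, 'bpm': 150, 'type': 'abnormal_hr'}]
```

Requiring several consecutive out-of-range readings is a simple guard against single-sample sensor artifacts. A trained model would replace `update()` while keeping the same forward-only-events contract.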

Edge AI architecture isn’t just about the model — it’s about how we capture, process, and secure data at the point of origin.

- Reduced processing and storage costs

- Faster AI response times

- Enhanced on-site data privacy and compliance

- Scalable regional deployment with resilient architecture

“Smarter AI Starts at the Proxy Layer — A DevOps Perspective on Model-Ready Data”

#EdgeAI #DistributedProxy #ProxyAZ #RealTimeAI #SmartCities #AIInfrastructure #EdgeComputing #CTOStrategy #BandwidthOptimization #IoTData #AIPrivacy #DataProcessing #LowLatencyAI #AI2025 #AutonomousDriving #SmartHospitals #AIatTheEdge